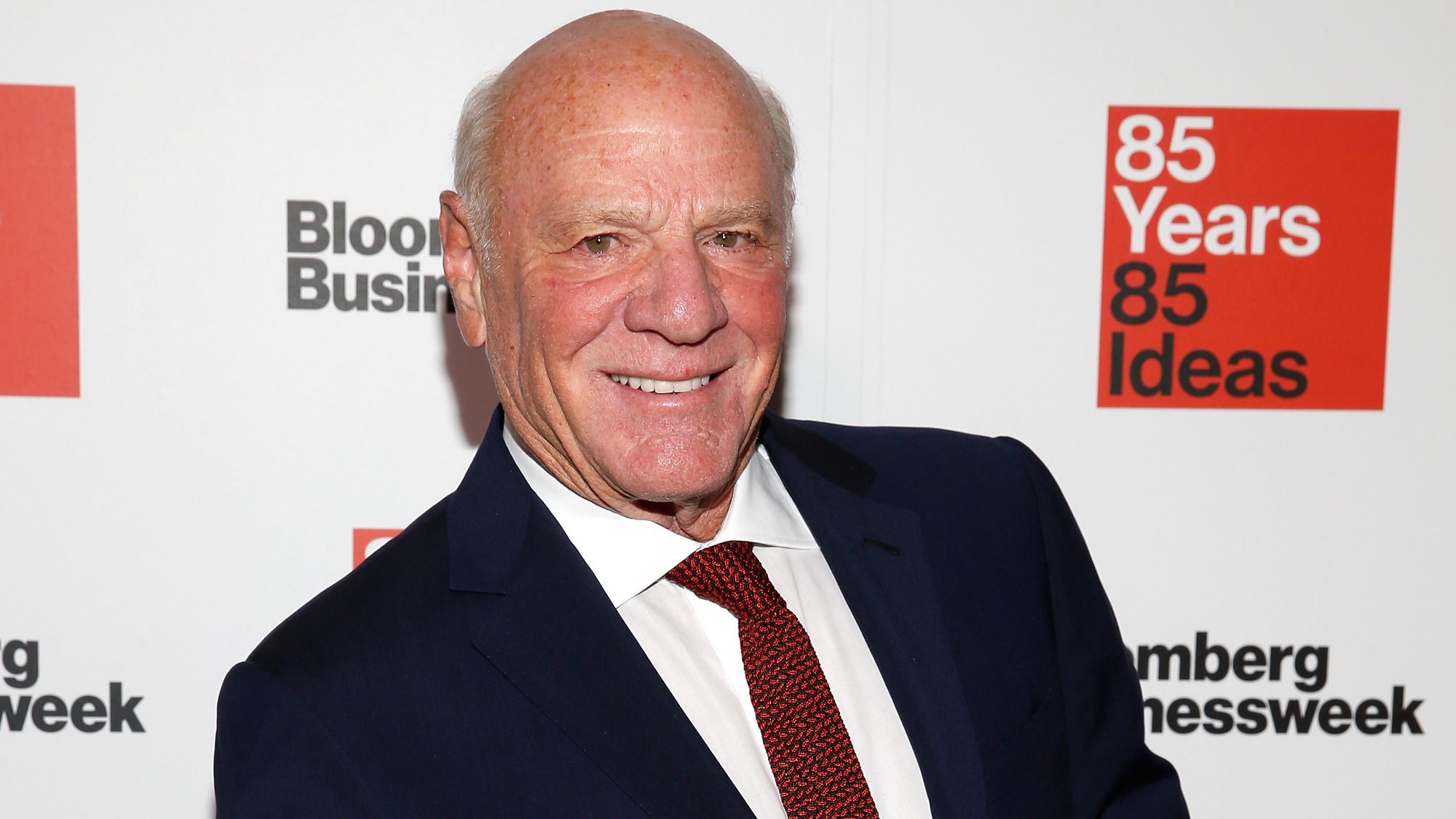

Barry Diller, AI, and Copyright: Media, Legal, and Regulatory Implications in the UK Context

Barry Diller is a figure of long-standing influence in the international media landscape. As chairman and senior executive of IAC (InterActiveCorp) and of Expedia Group, Diller has had a career spanning landmark roles in film, television, and digital innovation. From transforming Paramount Pictures and helping build Fox Broadcasting, to backing startups like Aereo, and now becoming a vocal advocate on Artificial Intelligence (AI) and copyright protection, Diller’s views reverberate far beyond American media.

In recent years, Diller has turned his attention to the complexities of copyright law in the age of generative AI. He has sought to lead a coalition of prominent media stakeholders, including News Corp and Axel Springer, to challenge AI developers over the unlicensed use of copyrighted journalism in model training. While the debate is largely framed in a US context, its ramifications for the UK are increasingly relevant. As the UK navigates rapid AI development within a largely “pro-innovation” regulatory framework, Diller’s advocacy raises critical questions about how copyright, media rights, and regulatory oversight should evolve.

This article explores Barry Diller’s career and current views, especially on the legality and implications of content use by AI models. With special attention to the United Kingdom’s legal framework, responsible regulators, and media environment, we examine what is at stake for publishers, technology companies, and the public.

What Is at Stake: Definitions and Key Concepts

At the heart of Barry Diller’s AI-related concerns lies the intersection of two areas of law and technology—copyright protection and artificial intelligence training. Generative AI models need vast datasets to learn how to produce text, images, or even video. These datasets are typically drawn from publicly available internet content, including journalistic articles, books, and posts—many of which are protected under copyright.

In legal terms:

Copyright is the legal right that grants creators of original works exclusive control over the use of their material for a certain period. This includes literary works, news articles, films, broadcasts, and online publications.

AI Content Training refers to the process of ingesting large datasets, which sometimes contain copyrighted material, to train artificial intelligence models. Examples include the large language models behind OpenAI’s ChatGPT, Google’s Gemini, and Meta’s Llama.

Fair Use (US) vs Fair Dealing (UK): In the US, “fair use” is a flexible doctrine that permits broader use of copyrighted material without permission, for example for education, research, or commentary. In the UK, “fair dealing” is narrower and applies only to specified purposes such as criticism, review, quotation, and news reporting.

Diller criticises AI developers such as OpenAI for sidestepping these legal principles by using copyrighted journalism and other content without licences, payment, or attribution.

This growing tension also feeds into broader debates about UK protectionist policy in digital markets, where concerns over copyright and content monetisation are driving renewed scrutiny. https://www.mypoliticalhub.com/uncategorized/protectionism-uk-policies-explained/

Barry Diller: Career Overview and Media Legacy

Barry Diller’s reputation was built over decades at the helm of major media transformations. His career highlights reflect a willingness to disrupt established models:

- Paramount Pictures (1974–1984): As chairman, Diller promoted a “creative conflict” approach, fostering vigorous debate and a culture of innovation rather than conventional deal-making. Under his leadership, the studio outperformed its rivals with hits such as “Raiders of the Lost Ark” and “Saturday Night Fever”.

- Fox Inc. and Fox Broadcasting (1984–1992): Working with Rupert Murdoch, Diller launched Fox as America’s fourth major broadcast television network, defying widespread scepticism. The network reshaped American television with programmes such as The Simpsons and COPS.

- Aereo (2013): Diller backed this startup, which streamed over-the-air broadcast television over the internet without a traditional cable subscription. Following lawsuits by major broadcasters, the US Supreme Court ruled against Aereo in 2014 (American Broadcasting Cos. v. Aereo), holding that its retransmissions infringed broadcasters’ public performance rights. The case nonetheless became a landmark in debates over digital TV disruption.

- IAC and Expedia: As chairman of both companies, Diller oversees extensive media properties and travel platforms. He remains an outspoken commentator on the changing media landscape. For example, he publicly rebuked Rupert Murdoch’s Fox News after the network’s $787.5 million defamation settlement with Dominion Voting Systems.

His appetite for editorial and strategic disruption now carries over into his opposition to AI’s unregulated consumption of copyrighted content. Other public figures, such as Ricky Gervais, have weighed in on adjacent UK debates about media, copyright, and the boundaries of speech, albeit from a cultural angle. https://www.mypoliticalhub.com/uncategorized/ricky-gervais-politics-explained/

A Timeline of Barry Diller’s Key Milestones

To better understand the breadth of Diller’s influence, consider the following table that outlines his major achievements and shifts in media strategy:

| Year | Event | Significance |
|---|---|---|
| 1974–1984 | Chairman, Paramount Pictures | Revitalised the studio through a dynamic creative process |
| 1984–1992 | Chairman & CEO, Fox Inc. | Launched Fox as the fourth US broadcast TV network, with wide audience reach |
| 2013 | Supporter of Aereo | Backed internet streaming of over-the-air television; the Supreme Court ruled against Aereo in 2014 |
| 2023–present | Advocate on AI and copyright | Sought to build a publisher coalition against the unlicensed use of content as AI training data |

This progression underlines Diller’s shift from media executive to techno-legal crusader.

How It Works: AI, Content Training, and Legal Loopholes

In training generative AI models, developers rely on data harvested from websites, often scraped indiscriminately. News articles, books, and commentary, much of it proprietary, are absorbed into models that can then reproduce or summarise that material as “original” output for users. Much of this content is protected by copyright, and some of it sits behind paywalls. Publishers and executives like Diller therefore argue that the practice undermines their business models and intellectual property rights.

The conflict hinges on how legal exceptions such as “fair use” in the US and “fair dealing” in the UK are interpreted. Diller argues that these principles, developed long before AI, do not account for its scale and impact. For example, a person quoting a paragraph from a news article may fall within “fair dealing”. By contrast, a machine ingesting millions of articles to train a model does not obviously fall under the same umbrella.

Much UK legal commentary shares this caution. UK law contains no broad copyright exception for acquiring machine learning training data. The text and data mining exception in section 29A of the Copyright, Designs and Patents Act 1988 covers only non-commercial research. The government’s 2023 AI White Paper does not pass AI-specific legislation. Instead, it takes a “pro-innovation” stance and relies on existing regulators to address issues within their own sectors.

This decentralised and somewhat voluntary model echoes sentiments explored in coverage of ideological and cultural autonomy, such as those seen in debates around the “New World Order” and questions of global governance. https://www.mypoliticalhub.com/uncategorized/new-world-order-politics-explained/

UK Authorities Governing AI and Copyright

Given the UK’s decentralised approach to AI governance, several bodies play roles depending on the sector or law in question:

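- Intellectual Property Office (IPO) – Sets UK copyright policy and has led government consultation on AI and copyright.

- Information Commissioner’s Office (ICO) – Oversees data protection and privacy, which becomes relevant when AI training uses personal data.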

- Competition and Markets Authority (CMA) – Regulates anti-competitive practices and may examine whether AI developers gain undue market power over content.

- Financial Conduct Authority (FCA) – Monitors uses of AI within financial markets, notably algorithmic trading.

These regulators work within the framework set out in the 2023 AI White Paper. That framework did not propose a stand-alone AI Act and instead encourages sector-specific codes and audits.

Risks and Warning Signs

The risks associated with AI’s use of copyright-protected data are both economic and legal. Diller’s coalition seeks legal remedies not only to protect its members’ content but also to challenge the unlicensed growth of AI.

Some of the key risks include:

- Loss of Revenue and Control: Publishers spend resources producing content. When AI models replicate this without payment or attribution, the value chain collapses.

- Intellectual Property Infringement: Use of protected works without consent potentially breaches both UK and US IP laws.

- Litigation Exposure: Just as Diller’s Aereo venture was halted by a US Supreme Court decision, AI firms may face similar lawsuits over content use.

- Reputational Damage: As Fox News’s Dominion settlement over false election claims showed, organisations that fail to oversee what they publish can face legal and financial consequences.

- Misleading Consumers: If AI-generated content paraphrases journalism without disclosing its sources, misinformation may spread and public trust may decline.

These consequences also mirror the reputational risks faced by political and public figures whose engagement with media misinformation—whether through commentary or platforms—raises similar ethical questions. https://www.mypoliticalhub.com/uncategorized/who-is-scott-adams-politics/

Who Is Affected

The implications of this debate are expansive and impact multiple audiences:

- News and Media Publishers: Their content is especially likely to be scraped and reproduced by AI systems, eroding their revenue.

- Technology Firms: AI developers that rely on large-scale data mining to train models may face legal and regulatory scrutiny.

- Regulators and Legislators: Must now assess whether existing copyright and data protection laws are adequate for current technology.

- Consumers: Could be misled by repurposed AI-generated content, or lose out as a weakened media sector produces less quality journalism.

Recommendations and Best Practices

Barry Diller’s proposed path forward is regulatory clarity backed by litigation. For UK stakeholders, strategic caution and proactive engagement could reduce legal exposure.

Here are some specific recommendations inspired by Diller’s advocacy and UK policy trends:

- Publishers should use robots.txt directives and other technical measures to block unlicensed web scraping where legally possible. Note that robots.txt is advisory and is honoured only by compliant crawlers.

- AI developers need to seek licensing agreements upfront with content providers or risk lengthy litigation and reputational harm.

- Regulators should issue updated guidelines on how AI training relates to copyright and fair dealing, ensuring clarity.

- Journalism industry groups could form UK coalitions mirroring Diller’s to represent publisher interests during consultations.

- Consumers should scrutinise AI-generated content, especially when it lacks proper attribution or draws on sensitive data.
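The robots.txt recommendation can be illustrated with a minimal sketch. The Python snippet below uses the standard library’s `urllib.robotparser` to check whether a sample robots.txt opts out of three AI-related crawler tokens while leaving an ordinary search crawler such as Googlebot unaffected. The three tokens are GPTBot (OpenAI), Google-Extended (Google) and CCBot (Common Crawl). The sample file, the token list and the article URL are assumptions for illustration, not a recommended or complete configuration. The check only describes what compliant crawlers should do, since robots.txt cannot stop a scraper that ignores it.

```python
from urllib.robotparser import RobotFileParser

# Sample robots.txt (an assumption for illustration): opt out of several
# published AI-related crawler tokens while allowing every other crawler.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: Google-Extended
Disallow: /

User-agent: CCBot
Disallow: /

User-agent: *
Allow: /
"""

# Hypothetical article URL on a publisher's site.
ARTICLE_URL = "https://news.example.com/2024/analysis/ai-and-copyright"


def crawl_permissions(robots_txt: str, url: str, agents: list[str]) -> dict[str, bool]:
    """Return whether each user agent may fetch `url` under `robots_txt`."""
    parser = RobotFileParser()
    # parse() accepts the file's lines directly, so no network access is needed.
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in agents}


if __name__ == "__main__":
    agents = ["GPTBot", "Google-Extended", "CCBot", "Googlebot"]
    for agent, allowed in crawl_permissions(ROBOTS_TXT, ARTICLE_URL, agents).items():
        print(f"{agent:16} {'allowed' if allowed else 'blocked'}")
```

Run against this sample file, the script reports GPTBot, Google-Extended and CCBot as blocked and Googlebot as allowed. Crawler names and opt-out mechanisms change over time, so publishers would need to confirm them against each operator’s current documentation.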

As AI increasingly blends with media, law, and economics, the views of long-time disruptors like Barry Diller carry critical weight. His insistence on the importance of creative ownership and fair commerce in content is not simply nostalgic; it is a vital framework to ensure that news and information continue to serve the public in democratic and transparent ways.

In the UK’s context, where AI law remains decentralised and largely voluntary in nature, Diller’s advocacy serves as both a warning and a roadmap. Stakeholders across technology, media, and government must collaborate thoughtfully, balancing innovation with protection. Whether through licensing regimes, updated fair dealing definitions, or new sector-specific codes, the actions taken in the next five years will define the media landscape for generations to come.